This is part three of our series on programming in the modern computer age. Last time, we discussed the rise of user-oriented languages. We now report on the latest of them and why it's so exciting.

by Gideon Marcus

Revolution in Mathematics

The year was 1793, and the new Republic of France was keen to carry its revolution to the standardization of weights and measures. The Bureau du cadastre (surveying) had been tasked to construct the most accurate tables of logarithms ever produced, based on the recently developed, more convenient decimal division of the angles. Baron Gaspard Clair François Marie Riche de Prony was given the job of computing the natural logarithm of all integers from 1 to 200,000 — to more than 14 places of accuracy!

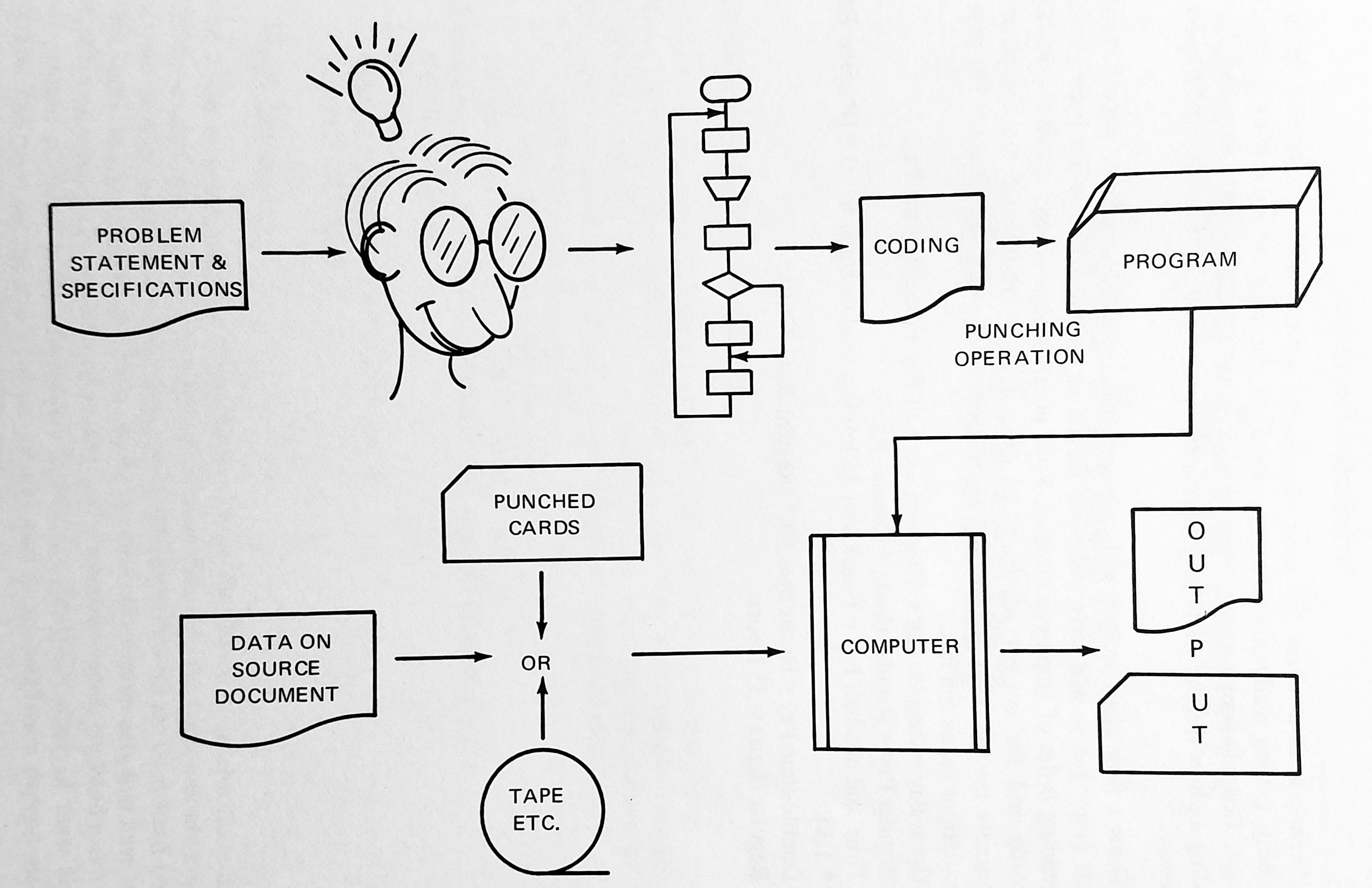

Recognizing that there were not enough mathematicians in all of France to complete this project in a reasonable amount of time, he turned to another revolutionary concept: the assembly line. Borrowing inspiration from the innovation as described in Adam Smith's Wealth of Nations, he divided the task into three tiers. At the top level were five or six of the most brilliant math wizards, including Adrien-Marie Legendre. They selected the best formulas for computation of logarithms. These formulas were then passed on to eight numerical analysts expert in calculus, who developed procedures for computation as well as error-check computations. In today's parlance, those top mathematicians would be called "systems analysts" and the second tier folks would be "programmers."

Of course, back then, there were no digital machines to program. Instead, de Prony assembled nearly a hundred (and perhaps more) human "computers." These men were not mathematicians; indeed, the only operations they had to conduct were addition and subtraction! Thanks to this distributed labor system, the work was completed in just two years.
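How can addition alone fill a table with anything interesting? The technique goes unnamed above, so take this as my assumption: the usual answer is the method of differences, in which each new entry is the previous entry plus a running difference. Here is a minimal sketch of the idea, written in BASIC (the language this article goes on to describe). It tabulates squares rather than logarithms, purely to keep the arithmetic easy to follow, and the variable names S and D are my own.

```basic
10 REM TABULATE SQUARES BY THE METHOD OF DIFFERENCES
20 REM S HOLDS THE CURRENT SQUARE, D THE FIRST DIFFERENCE
30 REM THE SECOND DIFFERENCE OF N*N IS ALWAYS 2
40 LET S = 0
50 LET D = 1
60 FOR N = 0 TO 10
70 PRINT N, S
80 LET S = S + D
90 LET D = D + 2
100 NEXT N
110 END
```

Not one multiplication appears, yet the second column runs 0, 1, 4, 9, 16 and onward. A real logarithm table would need higher-order differences and starting values supplied by the analysts in the tier above, but the labor at the bottom would look much the same.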

The Coming Revolution

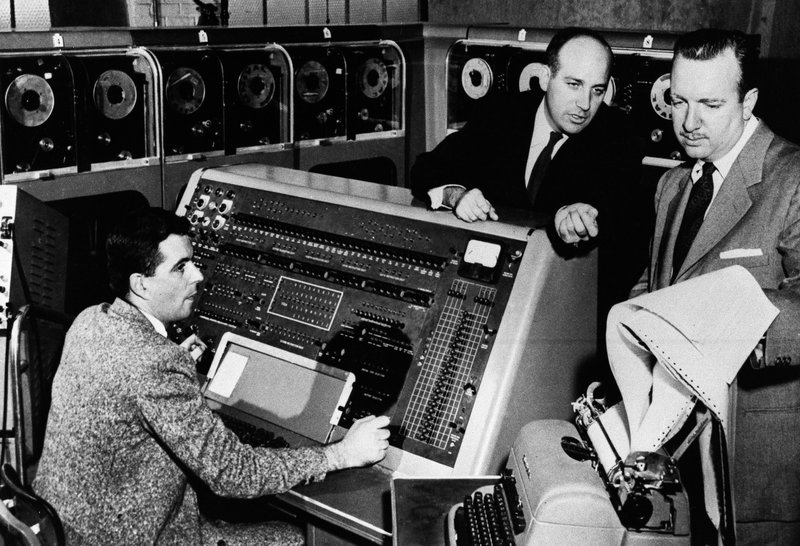

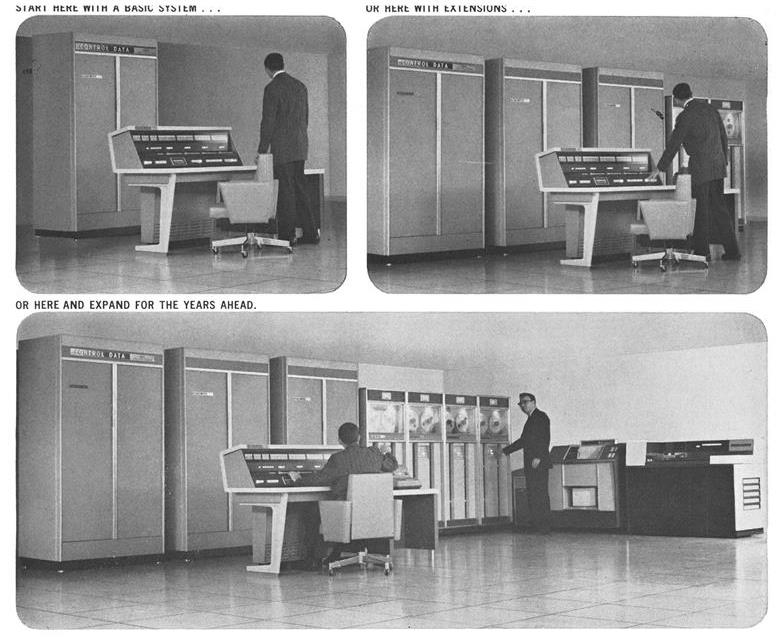

These days, thanks to companies like IBM, Rand, and CDC, digital computers have become commonplace — more than 10,000 are currently in use! While these machines have replaced de Prony's human calculators, they have created their own manpower shortage. With computation so cheap and quick, and application of these computations so legion, the bottleneck is now in programmers. What good does it do to have a hundred thousand computers in the world (a number being casually bandied about for near future years like 1972) if they sit idle with no one to feed them code?

As I showed in the first article of this series, communication between humans and the first computers required rarefied skills and training. For this reason, the first English-like programming languages were invented; they make coding more accessible and easier to learn.

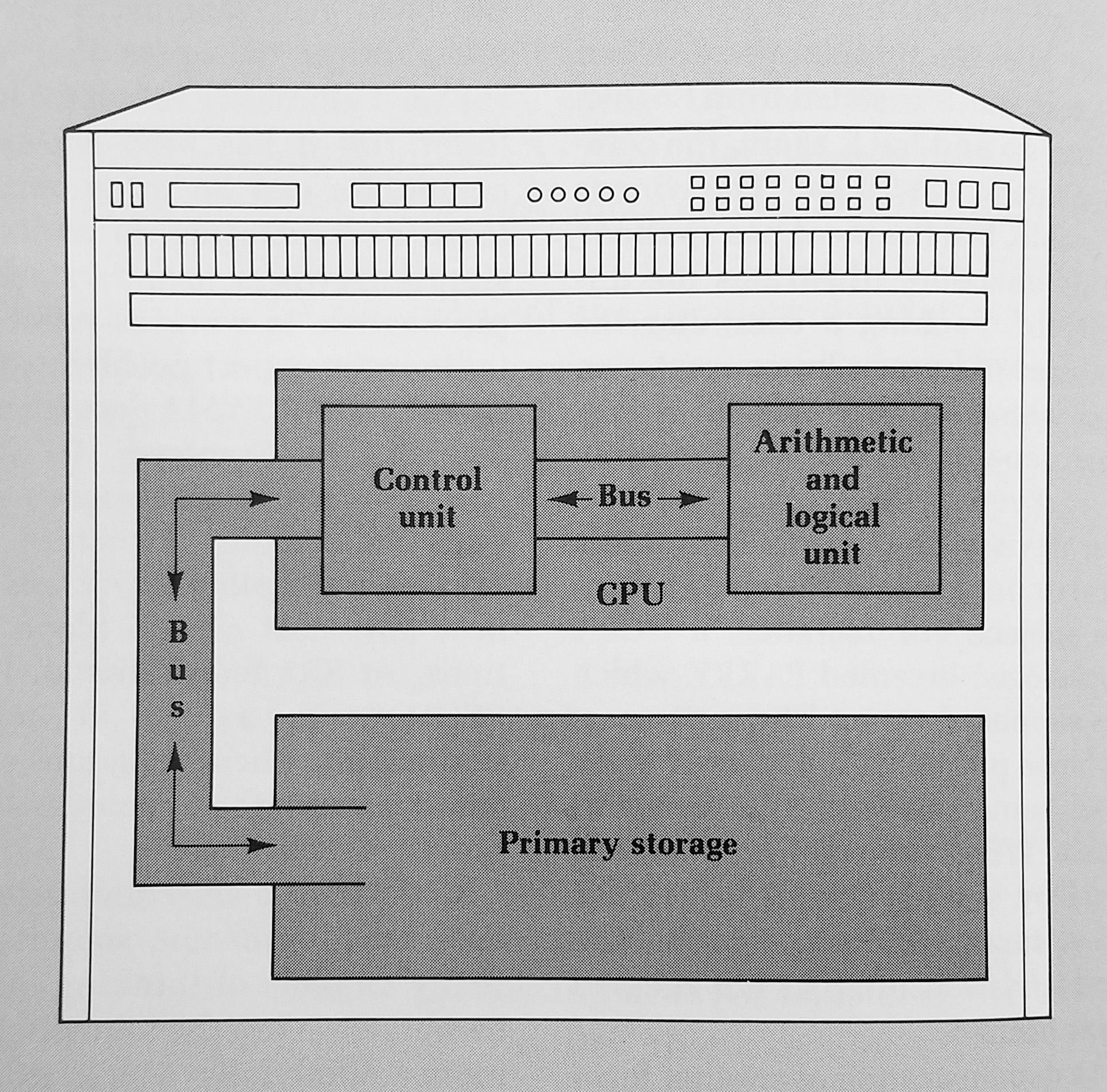

But developing programs in FORTRAN or COBOL or ALGOL is still challenging. Each of these languages is specialized for its particular function: FORTRAN, ALGOL, and LISP are for mathematical formulas, COBOL for business and record keeping. Moreover, all of these "higher-level" programming languages require a compiler, a program that turns the relatively readable stuff produced by the programmer into the 1s and 0s a computer can understand. It's an extra bit of work every time, and every code error that stalls the compiler is a wasted chunk of precious computer time.

By the early 1960s, folks were at work on both of these problems, and the solution that emerged answered both at once.

BASICally

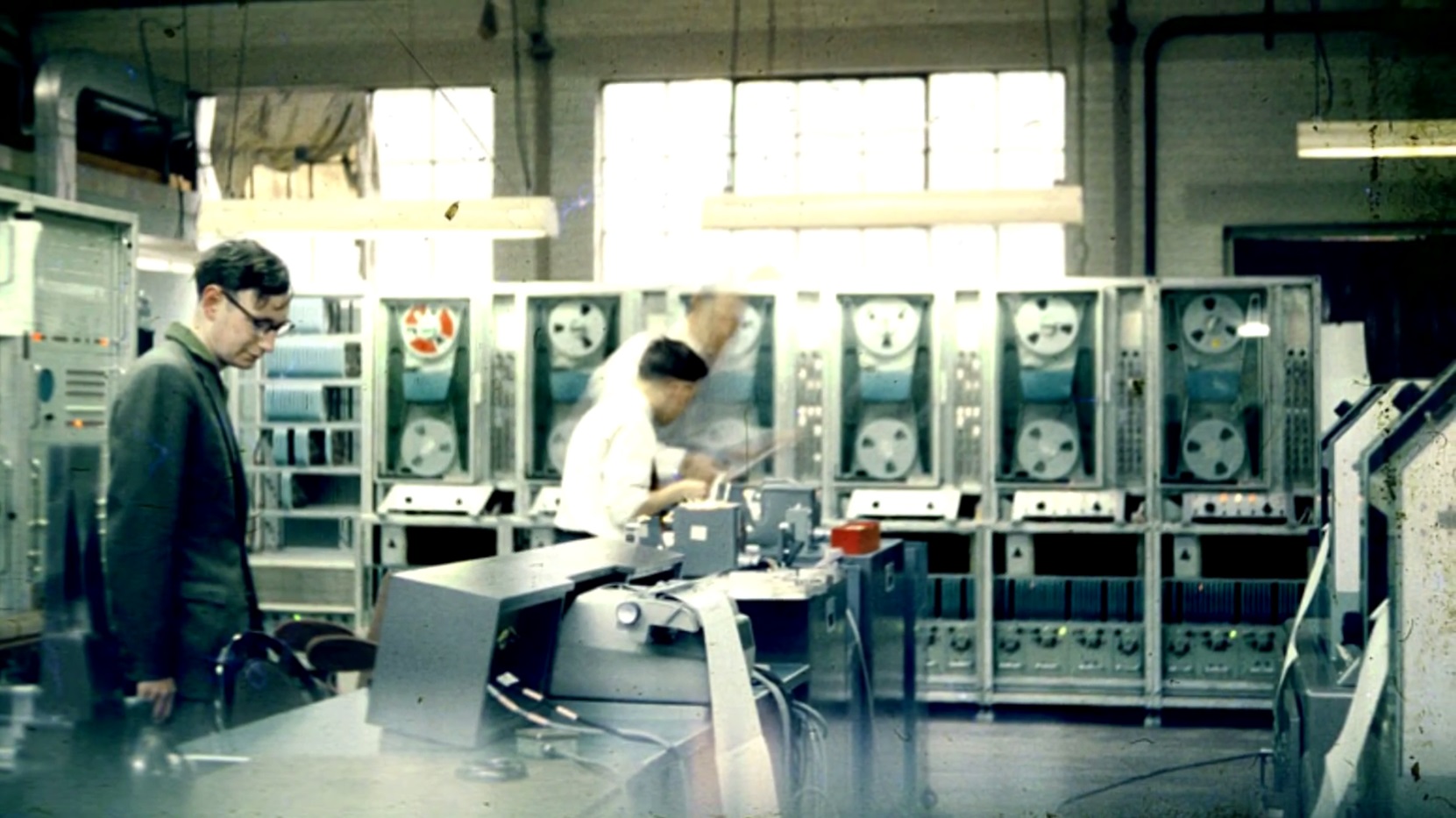

In 1963 Dartmouth Professor John Kemeny got a grant from the National Science Foundation to implement a time-sharing system on a GE-225 computer. Time-sharing, if you recall from Ida Moya's article last year, allows multiple users to access a computer at the same time, the machine running multiple processes simultaneously.

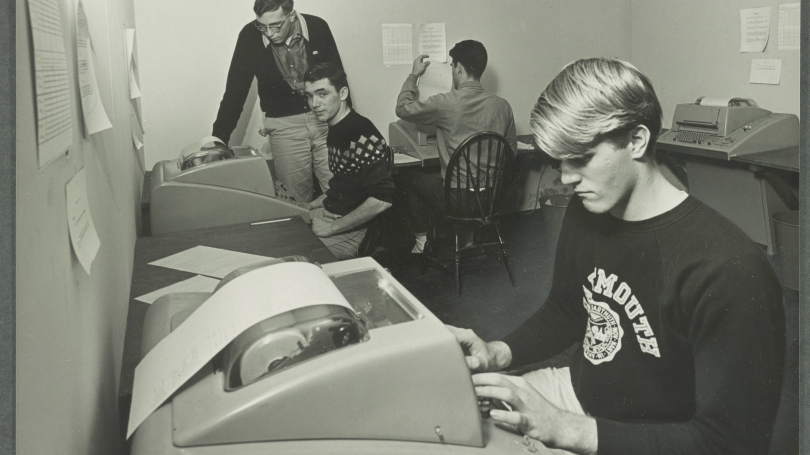

Photo Credit: Dartmouth College

Kemeny and his team, including Professor Thomas Kurtz and several undergrads, succeeded in completing the time-share project. Moreover, in the interest of making computing available to everyone, they also developed a brand-new programming language.

Beginner's All-purpose Symbolic Instruction Code, or BASIC, was the first language written specifically for novices. In many ways, it feels similar to FORTRAN. Here's an example of the "add two numbers" program I showed you last time:

5 PRINT "ADD TWO NUMBERS"

6 PRINT

10 READ A, B

20 LET C=A+B

30 PRINT "THE ANSWER IS", C

50 PRINT "ANOTHER? (1 FOR YES, 2 FOR NO)"

60 READ D

70 IF D = 1 THEN 6

80 IF D = 2 THEN 90

90 PRINT

100 PRINT "THANKS FOR ADDING!"

9999 END

Pretty easy to read, isn't it?

One thing you might notice is that there's no initial declaration of variables. You can code blithely along, and if you discover you need another variable (as I did at line 60), just go ahead and use one! This can lead to sloppy structure, but again, the priority is ease of use without too many formal constraints.
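To see that freedom in isolation, here is a smaller sketch of my own (not from the Dartmouth manual). It leans on one detail worth spelling out: in BASIC, READ draws its values, in order, from DATA statements placed anywhere in the program. The three DATA values are made up for illustration.

```basic
10 REM AVERAGE THREE NUMBERS. THE DATA VALUES ARE MADE UP
20 READ A, B, C
30 LET S = A + B + C
40 REM T WAS NEVER DECLARED. IT EXISTS THE MOMENT IT IS USED
50 LET T = S / 3
60 PRINT "THE AVERAGE IS", T
70 DATA 4, 8, 15
80 END
```

Run it and the machine prints the label followed by 9. Neither S nor T was announced in advance; each came into being on the line where it was first assigned.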

Indeed, there's really not much to the language — the documentation for BASIC comprises 23 pages, including sample programs.

So let me tell you the real earth-shaking thing about BASIC: the compiler is built in.

On the Fly

Let's imagine that you are a student at Stuffy University. Before time-sharing, if you wanted to run a program on the computer, you'd have to write the thing on paper, then punch it into cards using an off-line cardpunch, then humbly submit the cards to one of the gnomes tending the Big Machine. He would load the FORTRAN (or whatever language) compiler into the Machine's memory. Then he'd run your cards through the Machine's reader. Assuming the compiler didn't choke, you might get a print-out of the program's results later that day or the next.

Now imagine that, through time-sharing, you have a terminal (a typewriter with a TV screen or printer) directly attached to the Machine. That's a revolution in and of itself because it means you can type your code directly into a computer file. Then you can type the commands to run the compiler program on your code, turning it into something the Machine can understand (provided the compiler doesn't choke on your bad code).

But what if, instead of that two-step process, you could enter code into a real-time compiler, one that can interpret as you code? Then you could test individual statements, blocks of code, whole programs, without ever leaving the coding environment. That's the revolution of BASIC. The computer is always poised and ready to RUN the program without your having to save the code into a separate file and run a compiler on it.

Kemeny watches his daughter, Jennifer, program — not having to bother with a compiler is particularly nice when you haven't got a screen! Photo Credit: Dartmouth College

Moreover, you don't need to worry about arcane commands telling the program where to display output or where to find input (those numbers after every READ and WRITE command in FORTRAN). It's all been preconfigured into the programming-language environment.

To be sure, making the computer keep all of these details in mind results in slower performance, but given the increased speed of machines these days and the relatively undemanding nature of BASIC programs, this is not too important.

For the People

The goal of BASIC is to change the paradigm of computing. If Kemeny has his way, programming will no longer be the exclusive province of the lab-coated corporate elites nor the young kooks at MIT who put together SPACEWAR!, the first computer game. The folks at Dartmouth are trying to socialize computing, to introduce programming to people in all walks of life in anticipation of the day that there are 100,000 (or a million or a billion) computers available for use.

Vive la révolution!

Photo Credit: Dartmouth College


![[May 10, 1965] A Language for the Masses (Talking to a Machine, Part Three)](https://galacticjourney.org/wp-content/uploads/2020/05/650510jennifer-662x372.jpg)
